507 words

3 minutes

[CS5446] Reinforcement Learning Generalization

CAUTIONProblem: Tabular representation cannot scale big

Two solutions:

- Function Approximation

- Policy Search

Function Approximation

- compact representation of true utility function

- input: states

- output: utility value

- better generalization

Linear Function Function Approximation

- Use Linear function to approximate

Approximate Monte Carlo Learning

- Supervised learning

- update parameters after each trial

- L2 loss:

- Update:

- for each parameter

Approximate Temporal Difference Learning

- a.k.a semi-gradient

- Utility:

- Q-Learning:

NOTEApproximate MC / TD are similar to MC / TD but is calculated for each parameters, multiplied by its gradient.

Issues

- The deadly triad

- may not converge if: function approx + bootstrapping + off-policy

- Catastropic forgetting:

- happen when over-trained, forget about the dangerous zone (訓練太久後,只訓練 optimal path,就忘記危險的地方。之後若走到那邊容易出事)

- Solution: Experience Replay

- Replay trials to ensure utility function still accurate for parts no longer visited

Non-Linear Function Approximation

- deep reinforcement learning, use gradient descent for back propogation

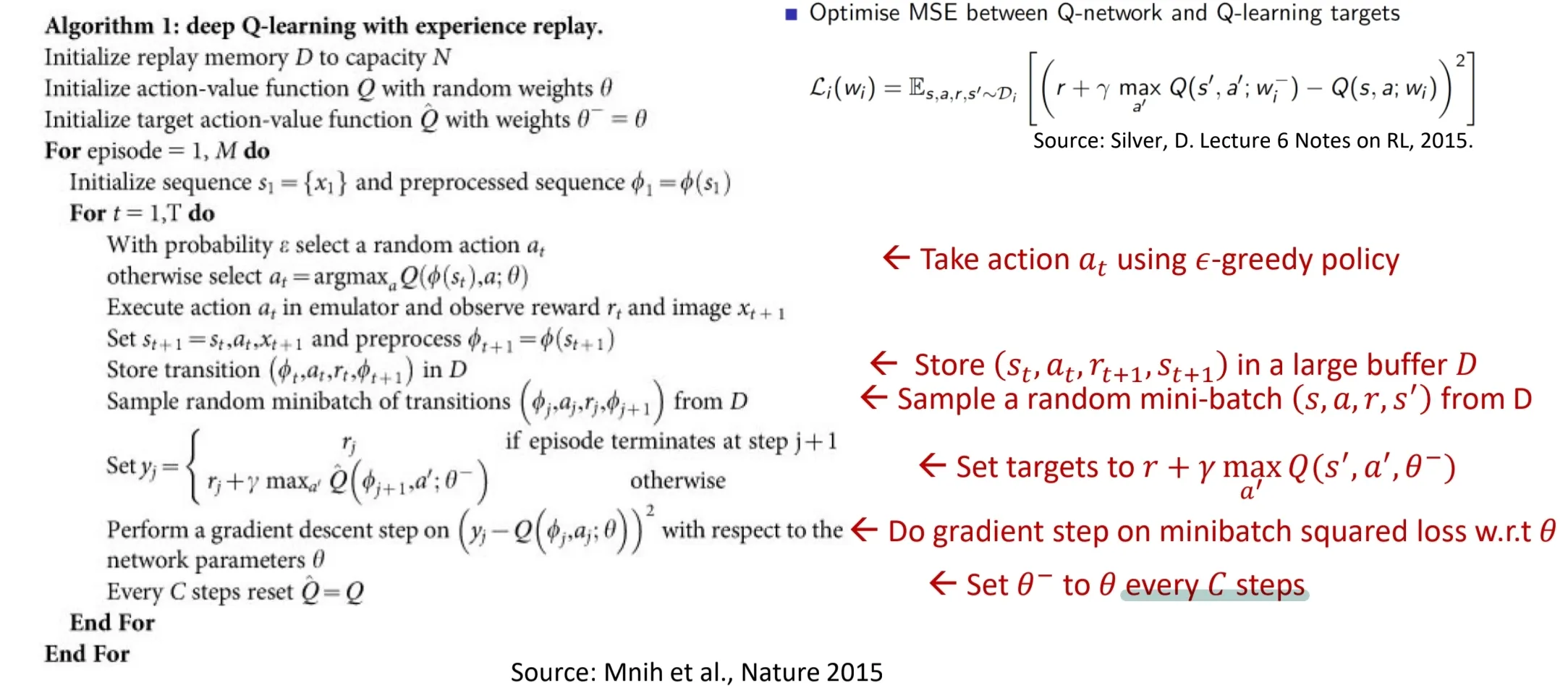

- Deep Q-Network (DQN)

- uses experience replay with fixed Q-target

Policy Search

- (find good policy)

- Problem:

- if max, else .

- discrete, cannot use gradient

- Solution: Stochastic Policy (probability)

- Use Softmax function differentiable

- use gradient descend to update

NOTEPolicy search estimates the policy function, but only care if it leads to optimal policy, doesn’t care whether the estimation is close to true utility.

- REINFORCE

- Monte-Carlo policy gradient

- high variance => use baseline (center the return to reduce variance)

- Advantage function , where is the baseline

Problem of policy gradient

- unstable returns bad updated FAIL!

- wants to restrict the update (限制每次更新的變動範圍)

- Solution: Minorize Maximization

- use a simpler objective (is the lower bound of the true one) to replace the true one .

- result of is guaranteed to , therefore we maximize

- maximize the simpler objective

- guarantee monotonic policy improvement

- use a simpler objective (is the lower bound of the true one) to replace the true one .

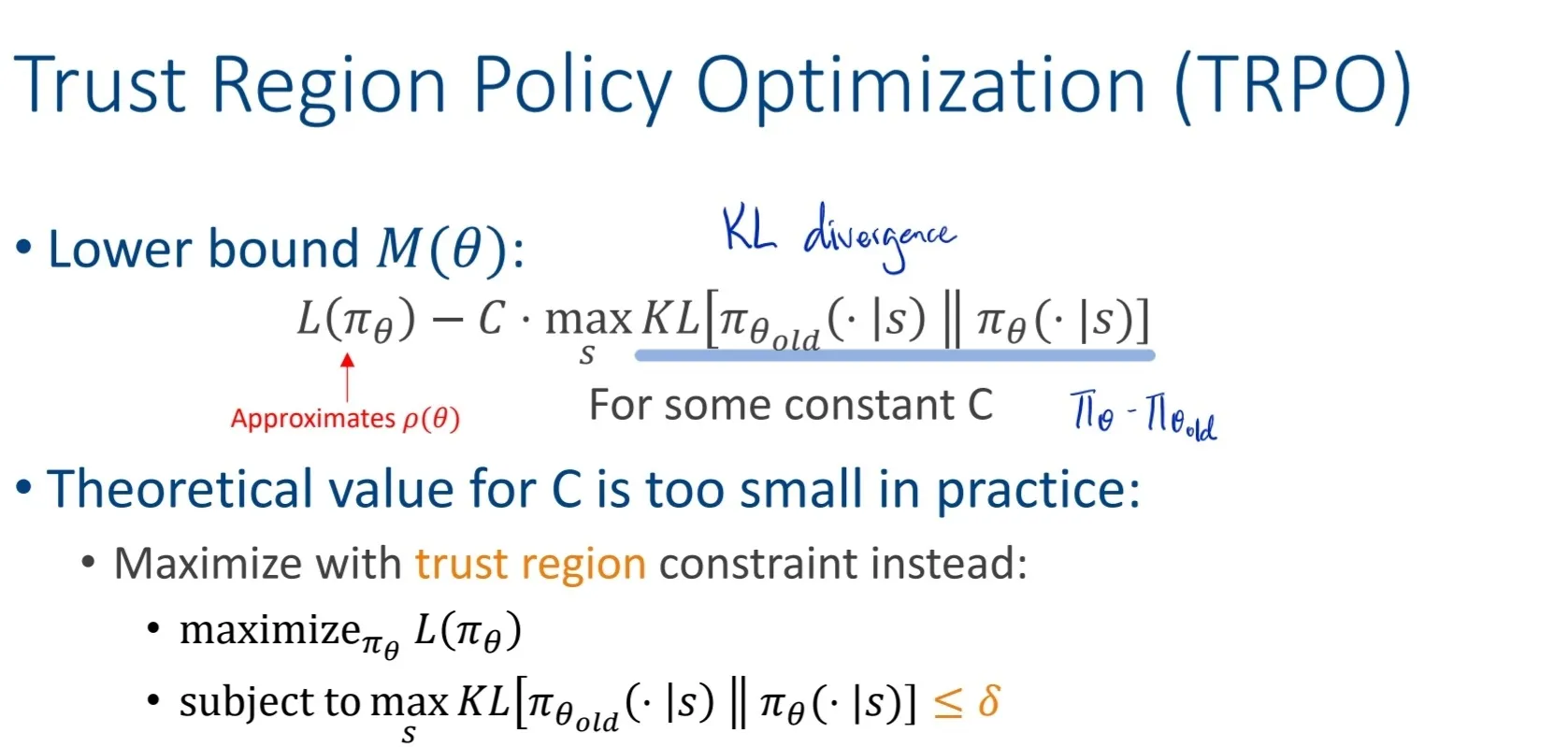

Trust Region Policy Optimization (TRPO)

- uses lower bound (KL divergence) to limit the change per update, to ensure that the update is gradual and stable, moving toward the optimal action

- the max KL diverge to

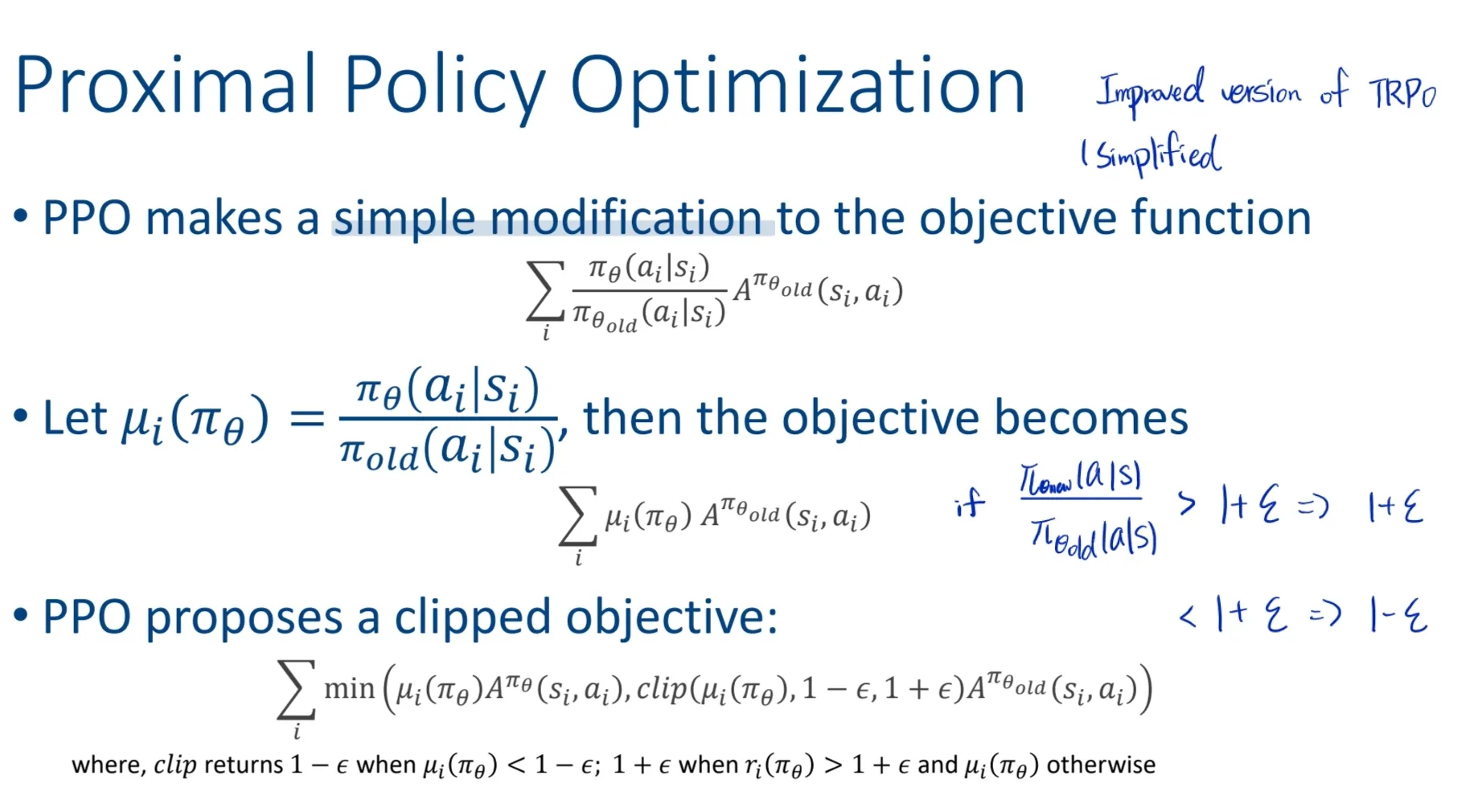

Proximal Policy Optimization (PPO)

- TRPO is too computationally complex

- uses clipped objective to limit updates

- must be in the range of

- if , then clip to

- if , then clip to

- 限制update的幅度介於 之間

Function approximation Policy search

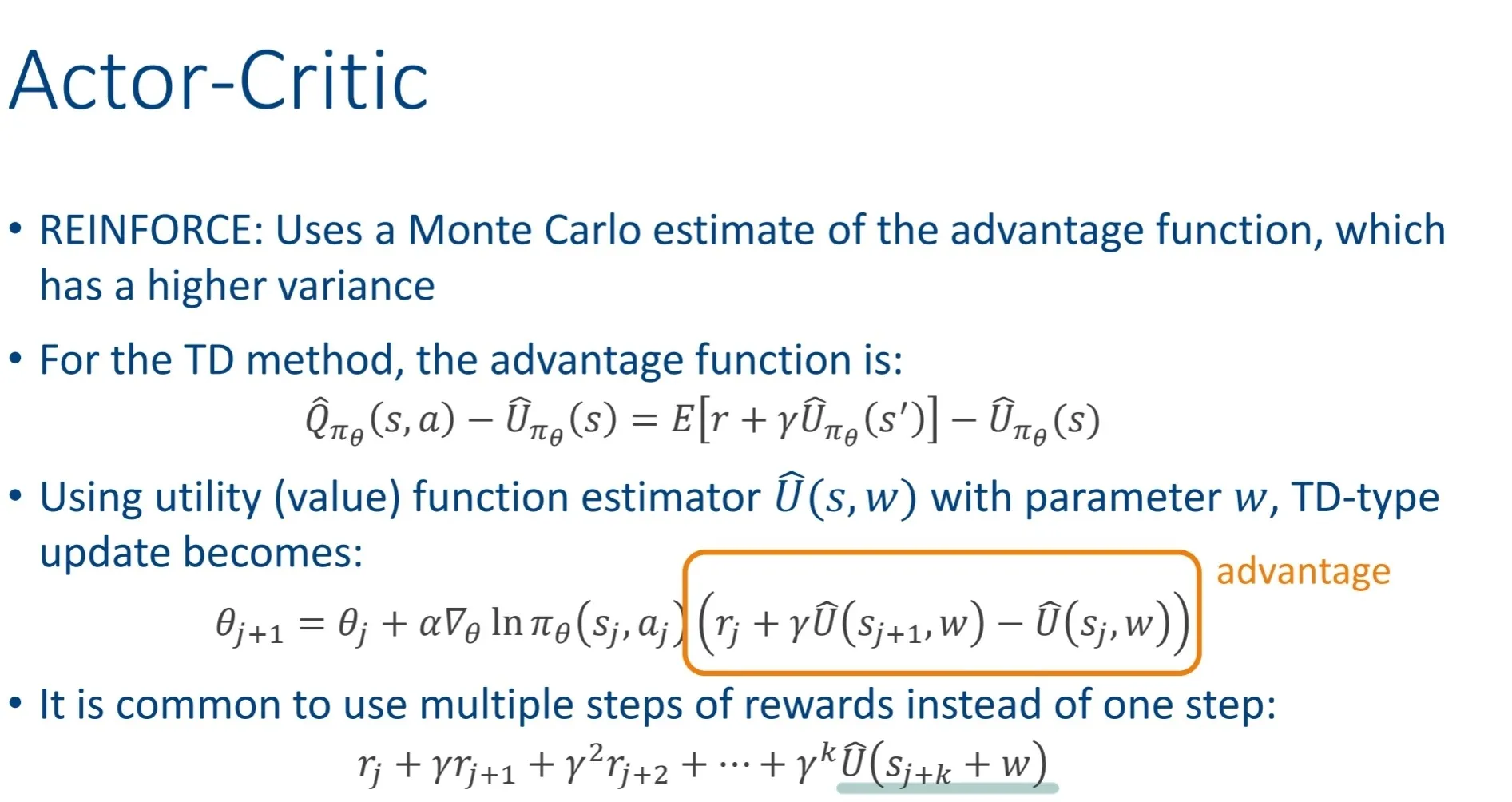

- Actor-Critic methods

- To estimate both utility and policy

- Learns a policy (actor) that takes action

- Also learns an utility function (critic) for evaluating the actor’s decisions

- The actor is running policy search

- The critic is running value-function approximation

- Actor adjusts policy based on feedback from critic (using policy gradient)

- The advantage estimate shows how much the currect policy is better than the average.

- The policy gradient update is

[CS5446] Reinforcement Learning Generalization

https://itsjeremyhsieh.github.io/posts/cs5446-6-reinforcement-learning-generalization/