Motivation#

- How to find unknown parameters of clusters in unsupervised learning?

- Which cluster does each data belong to?

K- Means Algorithm#

Non-probabilistic, 100% assign to that single cluster

- Given:

- data set {x1,...xN}

- number of clusters K

- Find:

- K cluster centers {μ1,...,μK}

- assign each data x to a cluster center

1-of-K coding:#

- for each data xn, rnk∈0,1 s.t∑Krnk=1

- if data xn is in cluster k, then rnk=1, otherwise 0.

- Hard assignment

- each data is assigned to only 1 cluster

- goal is to minimize J=∑N∑Krnk∣∣xn−μk∣∣2 s.t∑krnk=1

Algorithm:#

- Initialization: randomly choose {μk}

- Assignment: fixed {μk}, minimize J w.r.t {rnk}

- argminrn∑Krnk∣∣xn−μk∣∣2 s.t∑krnk=1

- Update: fixed {rnk}, minimize J w.r.t {μk}

- ∂μk∂J=2∑Nrnk(xn−μk)=0

- μk=∑Nrnk∑Nrnkxn (mean of all points assigned to cluster k)

- Repeat step 2 and 3 until converge

Can we use probability to assign? (Soft assignment)

Probabilistic Approach#

Gaussian Mixture Model#

- linear superposition of multiple Gaussians

- probability distribution:

- p(x)=∑KπKN(x∣μK,∑K) (K Gaussians)

- μK: mean

- ∑K: covariance

- ∑KπK=1: mixing coefficient (weight of each Gaussians in the model)

- 1-of-K representation: ZK=1⇒Zj=k=0

- assignment of x to kth Gaussian

- ZK∈{0,1}, ∑KZK=1

- p(z) is categorical distribution

- p(z) ∏KπKZk=catZ[π]

- if Zk=1 (Z is in kth Gaussian), πKZk=πK, 0 otherwise

- 0≤πk≤1,∑KπK=1

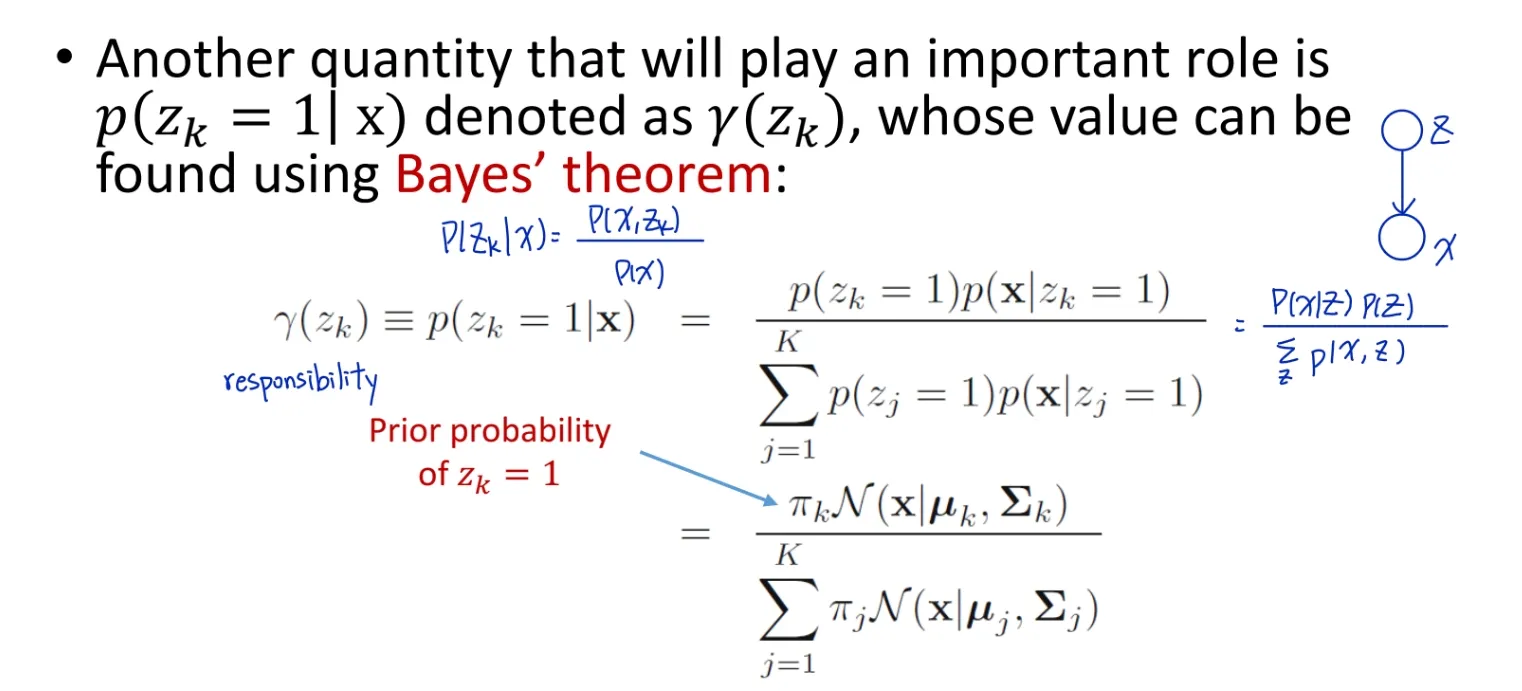

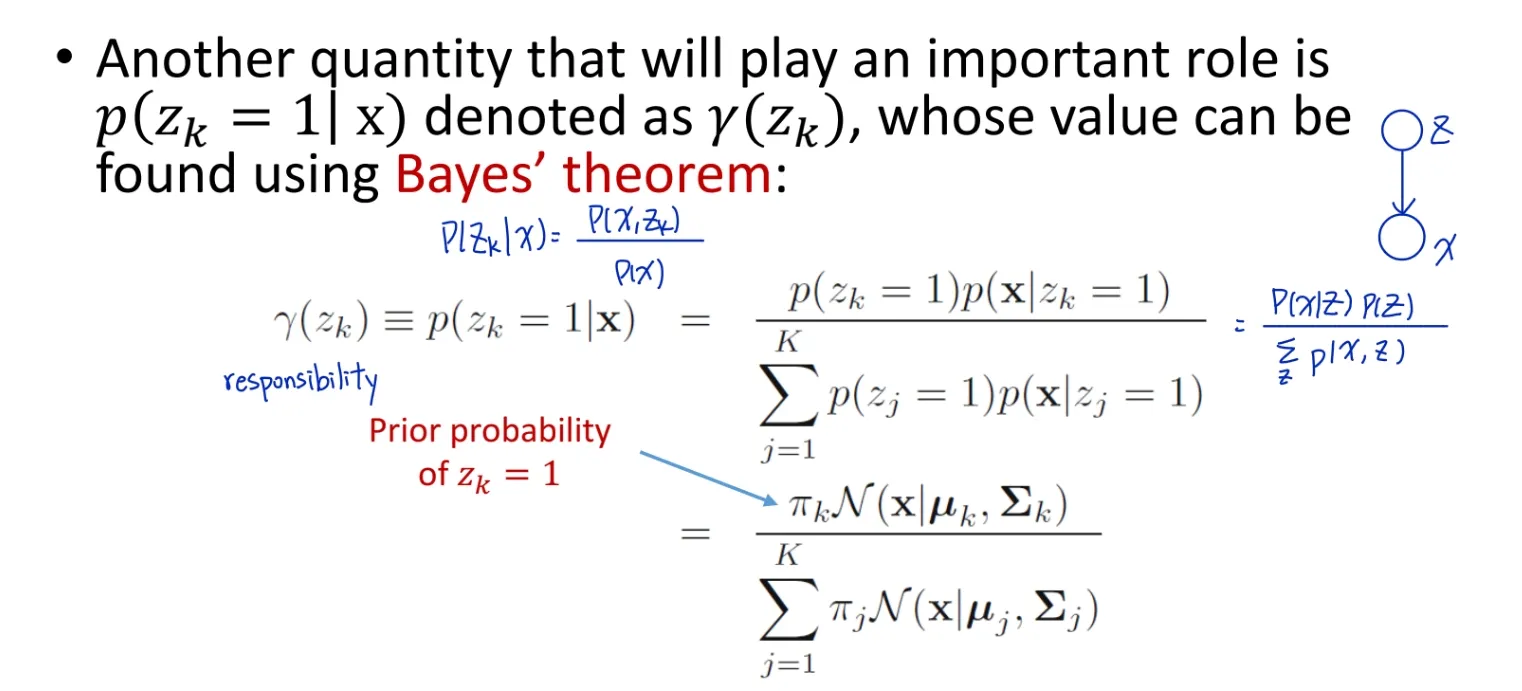

- probability of x belonging to each distribution

- p(x∣zk=1)=N(x∣μk,∑k)

⇒p(x∣Z)=∏KN(x∣μk,∑k)Zk - p(x,z)=p(z)p(x∣z)

⇒p(x)=∑Kp(z)p(x∣z)=∑K∏KπKZkN(x∣μK,∑K)Zk=∑KπKN(x∣μK,∑K)

- Responsibility

Maximum log-likelihood#

- we try to get the partial derivative w.r.t μk,∑k,πk

- argmaxθlnp(x1,...,xN∣θ)=argmaxπ,ν,∑∑Nln∑KπKN(xn∣μk,∑k), ∑ is inside ln ⇒ no closed-form

EM for Gaussian Mixtures#

- θ=πk,μk,∑k

- Initialize πk,μk,∑k

- Expectation step: evaluate responsibilies r(Z)

- r(Znk)=∑kπkN(xn∣μk,∑k)πkN(xn∣μk,∑k)

- Maximization step: update πk,μk,∑k

- μk=Nk1∑Nr(Znk)xn

- ∑k=Nk1∑Nr(Znk)(xn−μk)(xn−μk)T

- πk=NNk

- where Nk=∑Nr(Znk)

- Evaluate log-likelihood

- ln p(x∣μ,∑,π)=∑Nln(∑KπKN(xn∣μn,∑n))

- check for convergence

- Can run K_Means before initializing for EM algorithm

General EM algorithm#

- Goal: find maximum likelihood solution for models with latent variables

- ln p(x∣θ)=ln(∑Zp(x,Z∣θ))

- no closed form

- we don’t have complete {X,Z}, we can consider maximize the expected value of p(x,z∣θ) w.r.t p(z∣x,θ)

- Initialize μ,∑,π

- Expectation step

- Expectation Q(θ,θold)=∑Zp(z∣x,θold)ln p(x,z∣θ)=ez∣x,θold[ln p(x,z∣θ)]

- Mazimization step

- θnew=argmaxθQ(θ,θold)=argmaxθ∑Zp(Z∣x,θold)ln p(x,Z∣theta)

- log is now insize ∑ ⇒ closed form

- Check for convergence of either log likelihood or parameter value

- θold←θnew

Theory behind EM algorithm#

- maximize ln p(x∣θ)=ln∑Zp(x,z∣θ) is difficult, we can maximize its lower bound L(q,θ) instead

- lnp(x∣θ)=L(q,θ)+KL(q∣∣p) where

L(q,θ)=∑Zq(z)lnq(z)p(x,z∣theta)

KL(q∣∣p)=−∑Zq(Z)lnq(z)p(z∣x,θ)≥0