Goal: To calculate p(xF∣xE)

- where xF, xE are disjoint subsets

- xF: query node

xE: evidence node - needs to marginalize all nuisance variables xR (xV−(xE,xF))

- p(xF∣xE)=p(xE)p(xF,xE)=∑xFp(xF,xE)∑xRp(xf,xe,xR)⇒O(Kn) (exponential, intractable!)

Variable elimination (the naive way)#

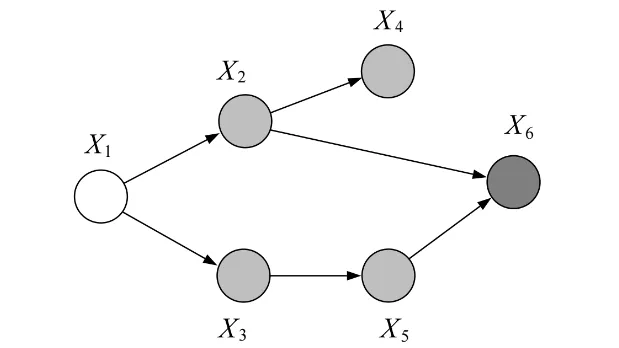

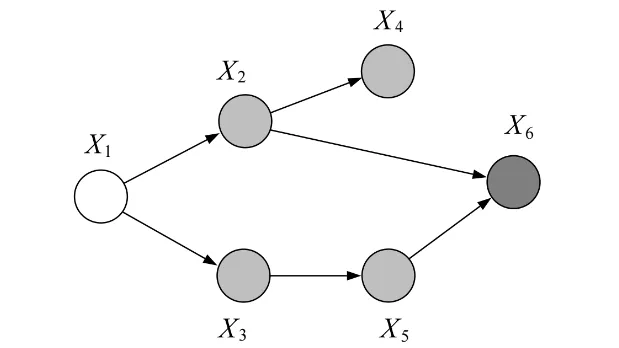

- p(x1,...,x5)=∑x6p(x1)p(x2∣x1)p(x3∣x1)p(x4∣x2)p(x5∣x3)p(x6∣x3,x5)=p(x1)p(x2∣x1)p(x3∣x1)p(x4∣x2)p(x5∣x3)∑x6(x6∣x3,x5)⇒ reduced table size to K3

- When evidence node is observed (x6=xˉ6):

- e.g p(x6∣x2,x5)⇒p(x6=1∣x2,x5)

table from 3D (x2,x5,x6) to 2D (x2,x5), x6=1 - p(x1∣xˉ6)=∑x2∑x3∑x4∑x5p(x1)p(x2∣x1)p(x3∣x1)p(x4∣x2)p(x5∣x3)p(xˉ6∣x3,x5)=p(x1)∑x2p(x2∣x1)∑x3p(x3∣x1)∑x4p(x4∣x2)∑x5p(x5∣x3)p(xˉ6∣x3,x5)=p(x1)∑x2p(x2∣x1)∑x3p(x3∣x1)∑x4p(x4∣x2)×m5(x2,x3)=p(x1)∑x2p(x2∣x1)∑x3p(x3∣x1)m5(x2,x3)∑x4p(x4∣x2)=p(x1)∑x2p(x2∣x1)m4(x2)∑x3p(x3∣x1)m5(x2,x3)=p(x1)∑x2p(x2∣x1)m4(x2)m3(x1,x2)=p(x1)m2(x1)=p(x1,xˉ6)⇒p(x1∣xˉ6)=∑x1p(x1)m2(x1)p(x1)m2(x1)

- mi(Sxi): when performing ∑xi, xsi are (variables in summand −xi)

NOTEConditioning ⇒ Marginalization trick for Notation

- evidence potential: δ(xi,xˉi)={1,ifxi=xˉi0,otherwise

- g(xˉi)=∑xig(xi)δ(xi,xˉi)

- δ(xE,xˉE)=∏i∈Eδ(xi,xˉi)={1,ifxi=xˉ10,otherwise

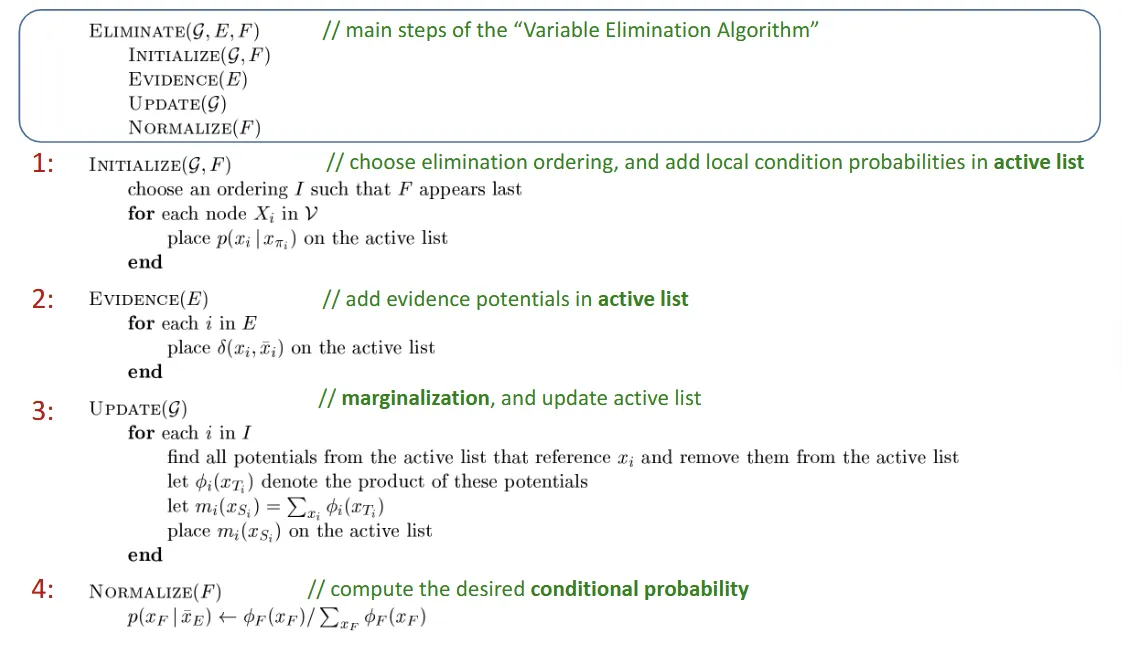

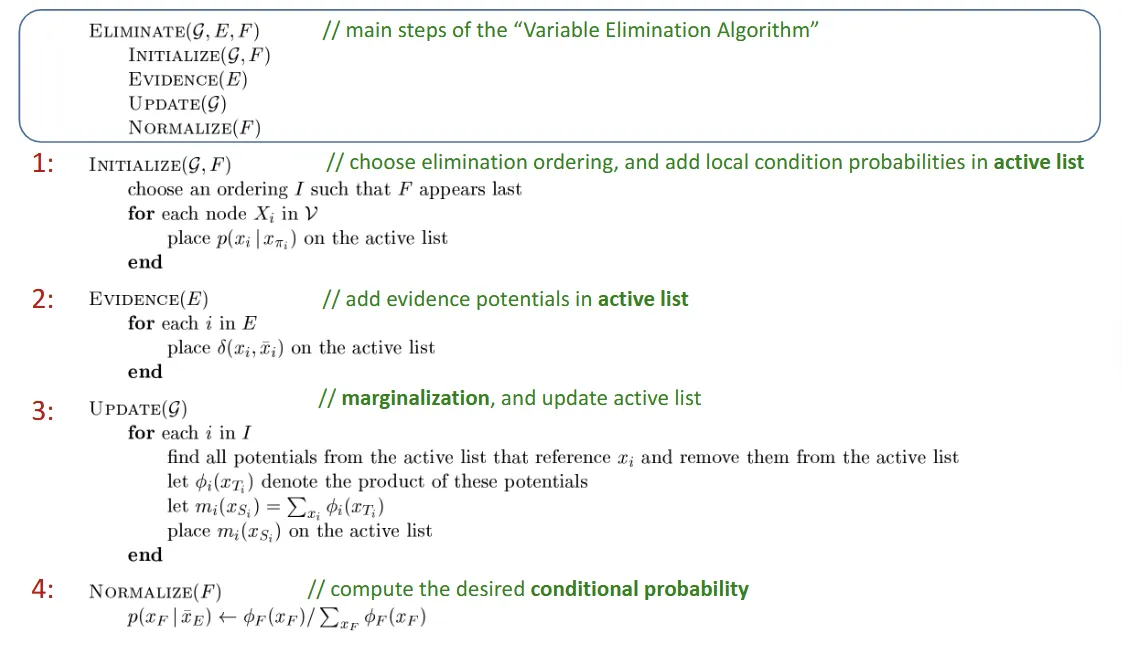

Algorithm of variable elimination#

Variable elimination algorithm for Undirected Graph#

- Only change in initialize step

- local condition (parent-child relation from DGM) → potential of 𝜓xc(xc)

- e.g p(x1,xˉ6)=Z1∑x2∑x3∑x4∑x5𝜓(x1,x2)𝜓(x1,x3)𝜓(x2,x4)𝜓(x3,x5)𝜓(x2,x5,x6)𝜓(x6,xˉ6)

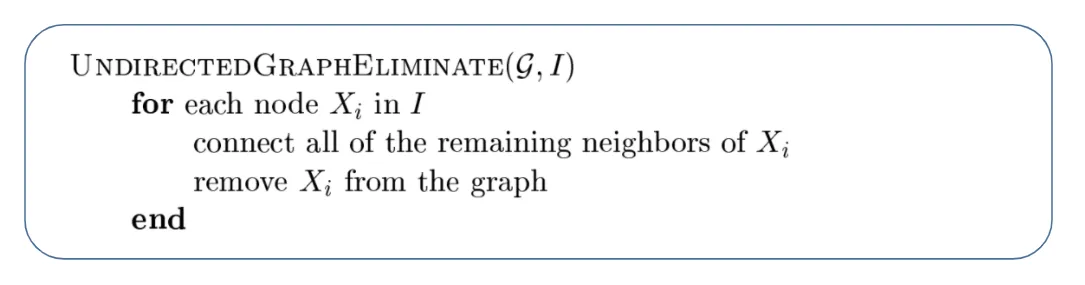

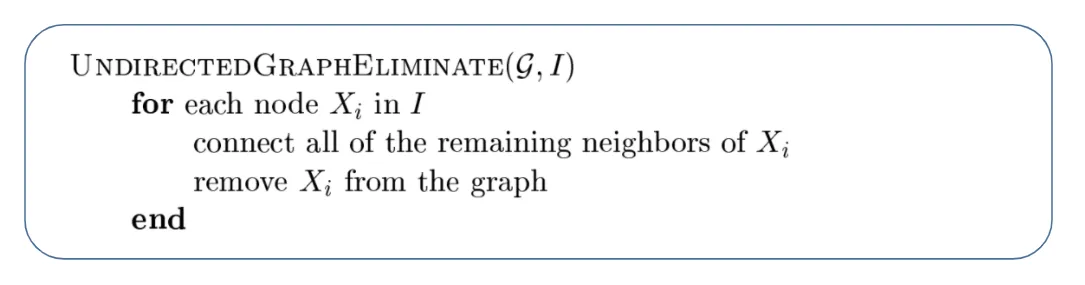

Reconstituted graph#

- Eliminate nodes and connect its (remaining) neighbors following the elimination order

- Reconstituted graph: original graph + new added edges

- complexity: the size of the largest clique in reconstructed graph

- Treewidth: smallest overall complexity −1 (NP-hard)

- we want to minimize the cardinality of largest elimination clique

Moralization#

to trandorm DGM to UGM

- for every node in DGM, link its parents together to trandform to UGM

- So, to transfrom DGM to reconstituted graph

DIRECTEDGRAPHELIMINATE- first we moraliza the DGM,

- then we perform the

UNDIRECTEDGRAPHELIMINATE algorithm

WARNINGWe need to re-run for every query node to get each singleton conditional probability! Very inefficient!

- Sol: use the sum-product algorithm to get all single-node marginals for tree-like structures

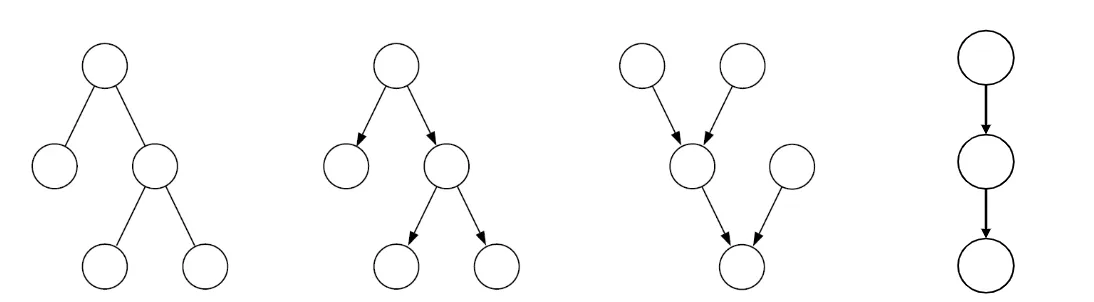

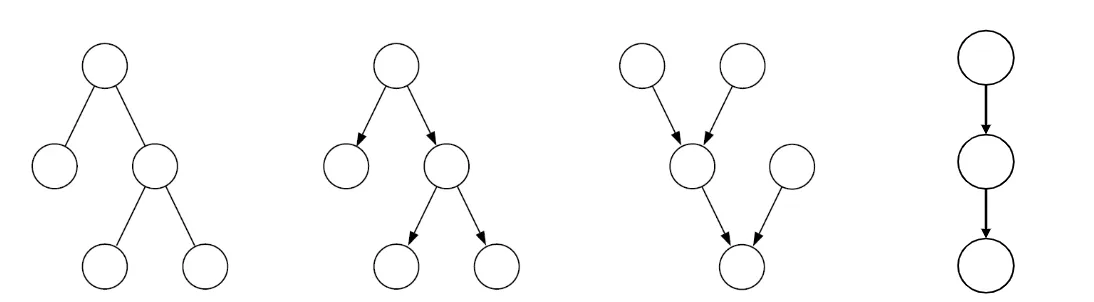

Tree-like structures#

- (a) Undirected tree: no loop

- (b) Directed tree: 1 single parent per node, moralizations will not add link

- (c) Polytree: nodes with more than 1 parent, moralization will lead to loops ⇒ cannot perform sum-product algorithm

- (d) Chain: just another directed tree

Parameterization#

- Undirected tree: only single & pair in cliques

- p(x)=Z1(∏i∈V𝜓(xi)∏(i,j)∈ϵ𝜓(xi,xj))

- Directed tree: simply parent-child relationship

- p(x)=p(xr)∏(i,j)∈ϵp(xj∣xi)

- p(xr) is the root node

- THey are formally identical

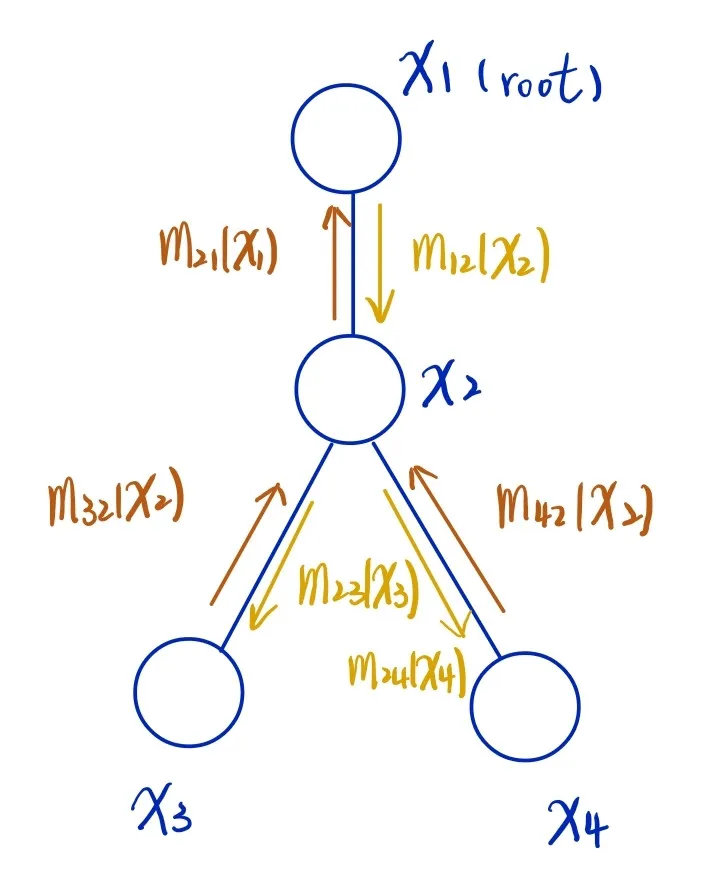

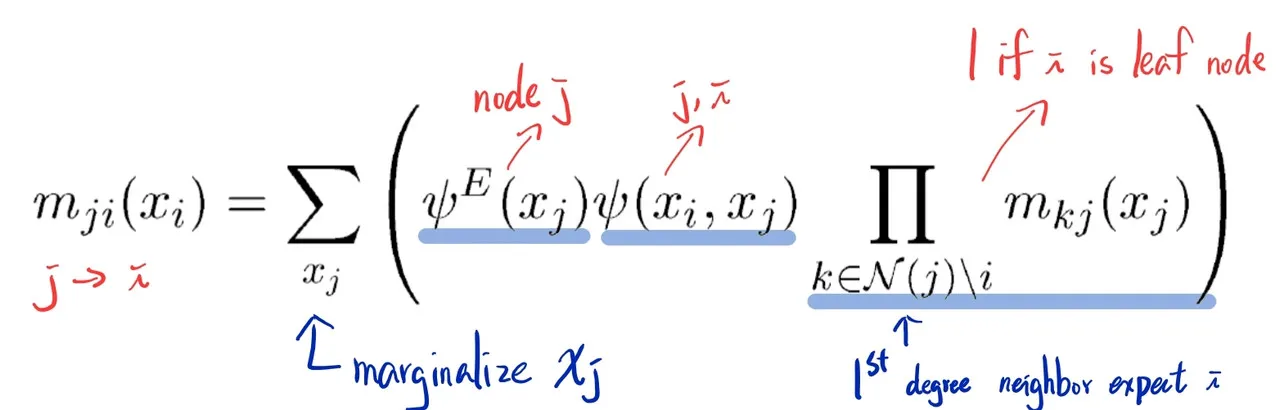

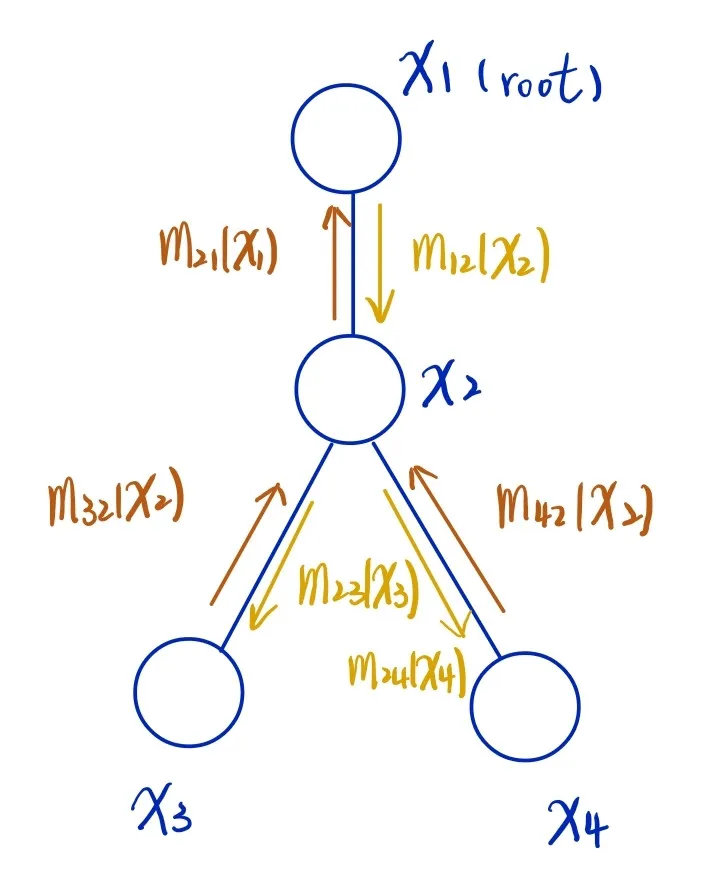

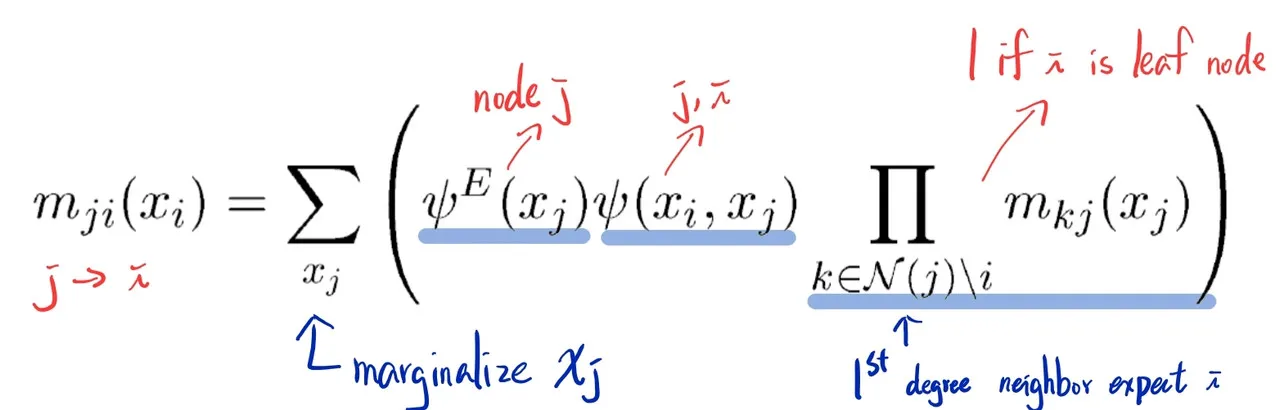

Message-Passing Protocol#

- a node can send a message to a neighbor only when it has received messages from all of its other neighbors (subtree), to ensure the messages passed to uppder node is complete.

Message#

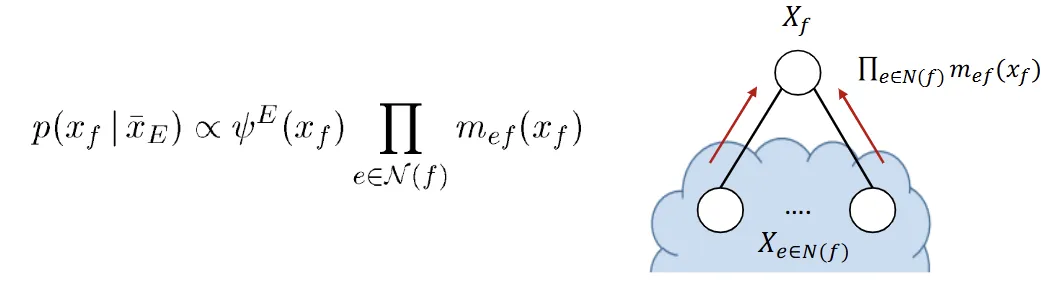

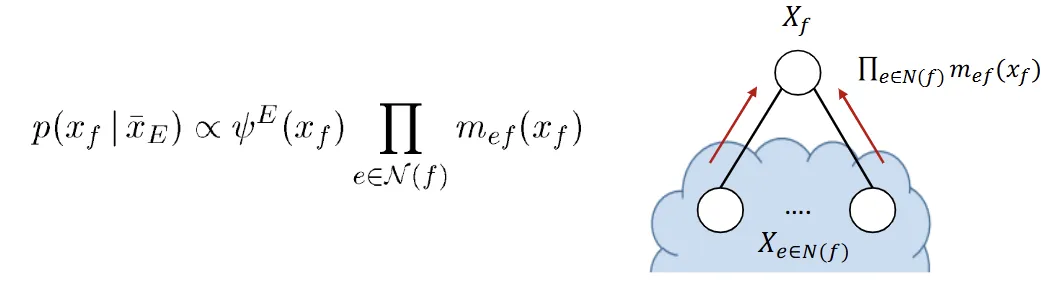

Compute marinal probability#

Sum-Product Algorithm a.k.a Belief Propogation#

- message flow from leaves to root (inward)

- message flow from root to leaves (outward)