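Conditional Independence

$$
x_a \perp x_c \mid x_b \;\Rightarrow\;
\begin{cases}
p(x_a, x_c \mid x_b) = p(x_a \mid x_b)\, p(x_c \mid x_b) \\
p(x_a \mid x_b, x_c) = p(x_a \mid x_b)
\end{cases}
$$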
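The factorization form of the definition can be checked numerically on a small discrete table. The sketch below is only illustrative: the helper names (`marginal`, `is_cond_indep`) and both example tables are assumptions made here, not part of the notes. It tests the equivalent identity p(a,b,c) p(b) = p(a,b) p(b,c), which avoids dividing by zero.

```python
from itertools import product

def marginal(joint, keep):
    """Sum a joint table {(a, b, c): prob} down to the index positions in `keep`."""
    out = {}
    for assignment, prob in joint.items():
        key = tuple(assignment[i] for i in keep)
        out[key] = out.get(key, 0.0) + prob
    return out

def is_cond_indep(joint, tol=1e-12):
    """Check x_a ⊥ x_c | x_b via p(a,b,c) p(b) == p(a,b) p(b,c)."""
    p_b = marginal(joint, [1])
    p_ab = marginal(joint, [0, 1])
    p_bc = marginal(joint, [1, 2])
    return all(
        abs(prob * p_b[(b,)] - p_ab[(a, b)] * p_bc[(b, c)]) <= tol
        for (a, b, c), prob in joint.items()
    )

# Hypothetical common-cause model: p(a, b, c) = p(b) p(a | b) p(c | b).
p_b = {0: 0.3, 1: 0.7}
p_a_given_b = {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.2, (1, 1): 0.8}  # keys (a, b)
p_c_given_b = {(0, 0): 0.6, (1, 0): 0.4, (0, 1): 0.5, (1, 1): 0.5}  # keys (c, b)
common_cause = {
    (a, b, c): p_b[b] * p_a_given_b[(a, b)] * p_c_given_b[(c, b)]
    for a, b, c in product([0, 1], repeat=3)
}

# Hypothetical table where a always equals c, whatever b is.
copy_model = {
    (a, b, c): (0.25 if a == c else 0.0)
    for a, b, c in product([0, 1], repeat=3)
}

print(is_cond_indep(common_cause))  # the factorized model passes the check
print(is_cond_indep(copy_model))    # the copy model fails it
```

The first table satisfies the definition by construction; the second does not, because knowing x_a pins down x_c even after x_b is given.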

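5 Rules of Conditional Independence

R1 Symmetry: x⊥y ⇨ y⊥x

R2 Decomposition: X⊥A,B ⇨ X⊥A & X⊥B

- pf:

$$
X \perp A,B \;\Rightarrow\; p(X,A,B) = p(X)\,p(A,B)
$$

$$
p(X,A) = \int p(X,A,B)\,dB = \int p(X)\,p(A,B)\,dB = p(X)\int p(A,B)\,dB = p(X)\,p(A)
$$

so X⊥A; integrating out A instead of B gives X⊥B.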

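R2 Reverse: X⊥A & X⊥B ⇨ X⊥A,B (DOESN'T hold)

- pf:

XOR counter-example: let A and B be independent fair bits and X = A XOR B. Then X⊥A and X⊥B, but X is a deterministic function of (A,B), so X⊥A,B fails.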
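The XOR counter-example can be verified by brute force. The sketch below assumes A and B are independent fair bits and X = A XOR B; the helper names are illustrative only. It compares each joint marginal with the product of its parts.

```python
from itertools import product

# Joint over (x, a, b): a and b are fair independent bits, x = a XOR b.
joint = {(x, a, b): 0.0 for x, a, b in product([0, 1], repeat=3)}
for a, b in product([0, 1], repeat=2):
    joint[(a ^ b, a, b)] = 0.25

def marginal(joint, keep):
    """Marginalize the joint table onto the index positions in `keep`."""
    out = {}
    for assignment, prob in joint.items():
        key = tuple(assignment[i] for i in keep)
        out[key] = out.get(key, 0.0) + prob
    return out

def independent(joint, left, right, tol=1e-12):
    """Check p(left, right) == p(left) p(right) for every value combination."""
    p_l = marginal(joint, left)
    p_r = marginal(joint, right)
    p_lr = marginal(joint, left + right)
    return all(
        abs(p_lr[l + r] - p_l[l] * p_r[r]) <= tol
        for l in p_l
        for r in p_r
    )

x_indep_a = independent(joint, [0], [1])       # X ⊥ A
x_indep_b = independent(joint, [0], [2])       # X ⊥ B
x_indep_ab = independent(joint, [0], [1, 2])   # X ⊥ (A, B)
print(x_indep_a, x_indep_b, x_indep_ab)
```

The two pairwise checks succeed while the joint check fails, which is exactly why decomposition cannot be reversed.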

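R3 Weak Union: X⊥A,B ⇨ X⊥A∣B & X⊥B∣A

- pf:

$$
X \perp A,B \;\Rightarrow\; p(X) = p(X \mid A,B)
$$

By decomposition, $X \perp A,B \Rightarrow X \perp A$, so

$$
p(X \mid A,B) = p(X) = p(X \mid A) \;\Rightarrow\; X \perp B \mid A
$$

The proof of $X \perp A \mid B$ is the same, using $p(X \mid B)$.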

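R3 Reverse: X⊥A∣B & X⊥B∣A ⇨ X⊥A,B

- pf (assuming p is strictly positive, so that the two conditionals below agree for every pair of values of A and B):

$$
X \perp A \mid B \;\Rightarrow\; p(x \mid A,B) = p(x \mid B)
$$

$$
X \perp B \mid A \;\Rightarrow\; p(x \mid A,B) = p(x \mid A)
$$

Hence $p(x \mid A) = p(x \mid B)$, and

$$
p(x) = \int p(x,A)\,dA = \int p(x \mid A)\,p(A)\,dA = \int p(x \mid B)\,p(A)\,dA = p(x \mid B)\int p(A)\,dA = p(x \mid B)
$$

$$
p(x \mid A,B) = p(x \mid B) = p(x) \;\Rightarrow\; X \perp A,B
$$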

R4 Contraction: X⊥A∣B and X⊥B ⇨ X⊥{A,B}

- pf:

$$
X \perp A \mid B \ \text{and}\ X \perp B \;\Rightarrow\; p(X \mid \{A,B\}) = p(X \mid B) = p(X) \;\Rightarrow\; X \perp A,B
$$

R4 Reverse: X⊥{A,B} ⇨ X⊥A∣B and X⊥B

- pf:

By weak union, X⊥{A,B} ⇨ X⊥A∣B.

By decomposition, X⊥{A,B} ⇨ X⊥B.
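Contraction and its reverse say that X⊥{A,B} is equivalent to the pair X⊥A∣B and X⊥B. As a sanity check, the sketch below builds a hypothetical table p(X) p(A,B), with A and B dependent on each other, and tests all three statements. The generic checker `cond_indep` and the numbers are assumptions made for illustration.

```python
from itertools import product

def marginal(joint, keep):
    """Marginalize a table {(x, a, b): prob} onto the index positions in `keep`."""
    out = {}
    for assignment, prob in joint.items():
        key = tuple(assignment[i] for i in keep)
        out[key] = out.get(key, 0.0) + prob
    return out

def cond_indep(joint, left, right, given=(), tol=1e-12):
    """Check left ⊥ right | given via p(l,r,g) p(g) == p(l,g) p(r,g)."""
    left, right, given = list(left), list(right), list(given)
    p_g = marginal(joint, given)
    p_lg = marginal(joint, left + given)
    p_rg = marginal(joint, right + given)
    p_lrg = marginal(joint, left + right + given)
    n_l, n_r = len(left), len(right)
    for key, prob in p_lrg.items():
        l, r, g = key[:n_l], key[n_l:n_l + n_r], key[n_l + n_r:]
        if abs(prob * p_g[g] - p_lg[l + g] * p_rg[r + g]) > tol:
            return False
    return True

# Index 0 is X, index 1 is A, index 2 is B.
p_x = {0: 0.4, 1: 0.6}
p_ab = {(0, 0): 0.1, (0, 1): 0.2, (1, 0): 0.3, (1, 1): 0.4}  # A and B are dependent
joint = {
    (x, a, b): p_x[x] * p_ab[(a, b)]
    for x, a, b in product([0, 1], repeat=3)
}

joint_statement = cond_indep(joint, [0], [1, 2])      # X ⊥ {A, B}
weak_union = cond_indep(joint, [0], [1], given=[2])   # X ⊥ A | B
decomposition = cond_indep(joint, [0], [2])           # X ⊥ B
print(joint_statement, weak_union, decomposition)
```

All three checks hold for this table, consistent with the two directions of R4.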

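R5 Intersection: X⊥Y∣W,Z and X⊥W∣Y,Z ⇨ X⊥Y,W∣Z

- pf (for strictly positive p):

$$
X \perp Y \mid W,Z \;\Rightarrow\; p(X \mid Y,W,Z) = p(X \mid W,Z)
$$

$$
X \perp W \mid Y,Z \;\Rightarrow\; p(X \mid Y,W,Z) = p(X \mid Y,Z)
$$

$$
p(X \mid W,Z) = p(X \mid Y,Z)
$$

$$
p(X \mid Z) = \int p(X,W \mid Z)\,dW = \int p(X \mid W,Z)\,p(W \mid Z)\,dW = p(X \mid Y,Z)\int p(W \mid Z)\,dW = p(X \mid Y,Z)
$$

$$
p(X \mid W,Y,Z) = p(X \mid Y,Z) = p(X \mid Z) \;\Rightarrow\; X \perp W,Y \mid Z
$$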

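R5 Reverse: X⊥{Y,W}∣Z ⇨ X⊥Y∣W,Z and X⊥W∣Y,Z

- pf:

$$
X \perp \{Y,W\} \mid Z \;\Rightarrow\; p(x \mid Z) = p(x \mid W,Y,Z)
$$

$$
X \perp \{Y,W\} \mid Z \;\Rightarrow\; X \perp Y \mid Z \;\Rightarrow\; p(x \mid Z) = p(x \mid Y,Z) \quad \text{(decomposition)}
$$

$$
p(x \mid W,Y,Z) = p(x \mid Z) = p(x \mid Y,Z) \;\Rightarrow\; X \perp W \mid \{Y,Z\}
$$

The proof of $X \perp Y \mid \{W,Z\}$ is symmetric, using $X \perp W \mid Z$.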

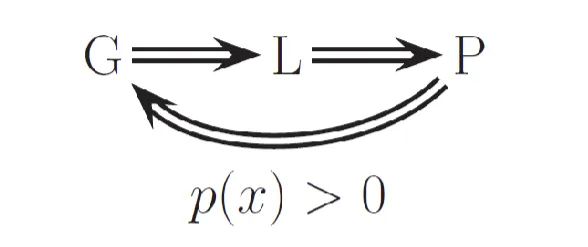

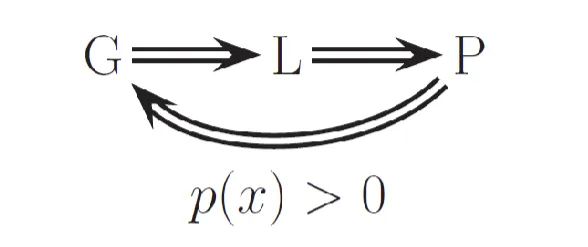

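Independence Map (I-Map)

- $I(p)$ represents all independencies in $p(x_1, \dots, x_N)$

- G is valid (an I-map of p) if $I(G) \subseteq I(p)$, i.e. every independence read off the graph actually holds in p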

Markov Property

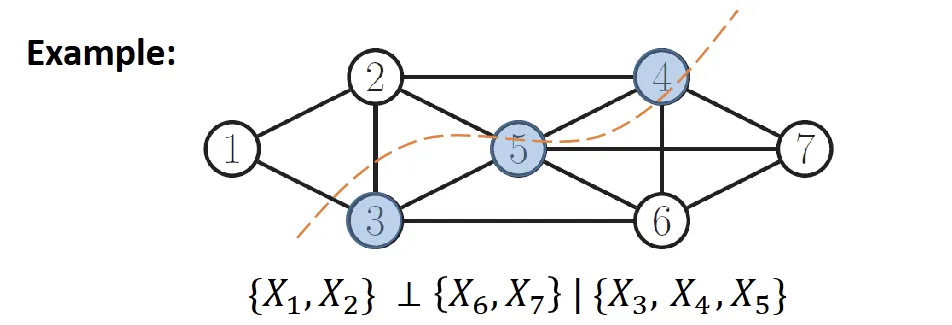

Global Markov Property#

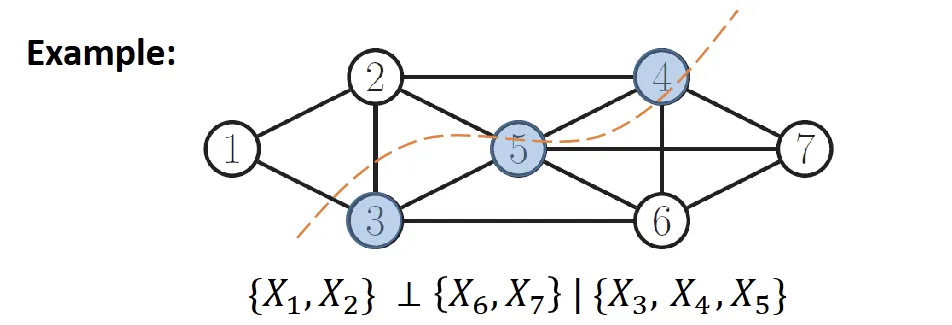

- xA⊥xB∣xC iff C seperated A from B

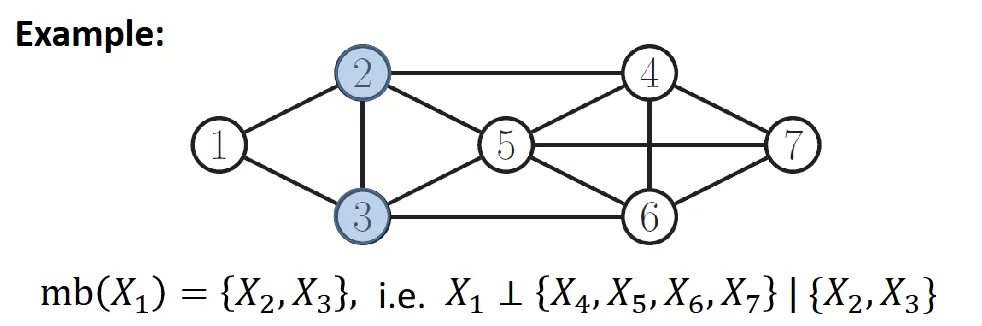

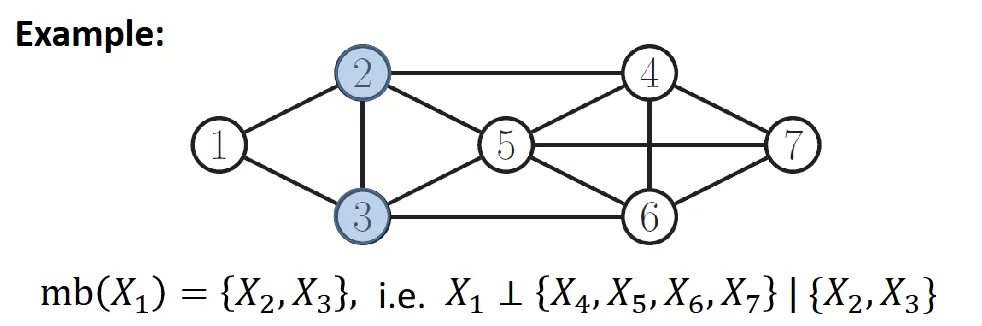
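Graph separation in an undirected graph can be tested with a breadth-first search that refuses to step into C. The sketch below is a minimal version under that reading of "separates"; the adjacency-dict representation and the example chain graph are assumptions made here.

```python
from collections import deque

def separated(adj, A, B, C):
    """Return True if every path from A to B in the undirected graph passes through C."""
    A, B, C = set(A), set(B), set(C)
    seen = set(A - C)          # start from the nodes of A that are not blocked
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        if node in B:
            return False       # reached B without crossing C
        for nbr in adj[node]:
            if nbr not in seen and nbr not in C:
                seen.add(nbr)
                queue.append(nbr)
    return True

# Hypothetical chain graph 1 - 2 - 3 - 4.
adj = {1: {2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}

blocked = separated(adj, {1}, {4}, {3})    # node 3 cuts the only path
open_path = separated(adj, {1}, {4}, set())
print(blocked, open_path)
```

Under the global Markov property, `separated(adj, A, B, C)` returning True corresponds to the statement x_A ⊥ x_B ∣ x_C.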

Local Markov Property

- $x_s \perp V \setminus \{\mathrm{mb}(x_s), x_s\} \mid \mathrm{mb}(x_s)$, where $\mathrm{mb}(x_s)$ is the Markov blanket of $x_s$

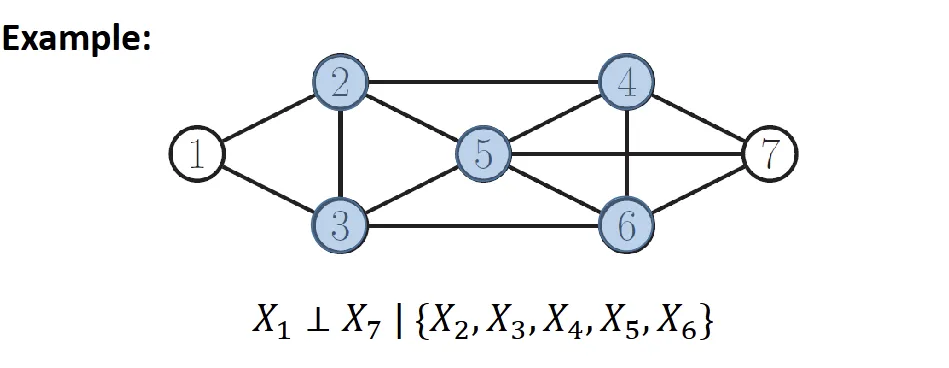

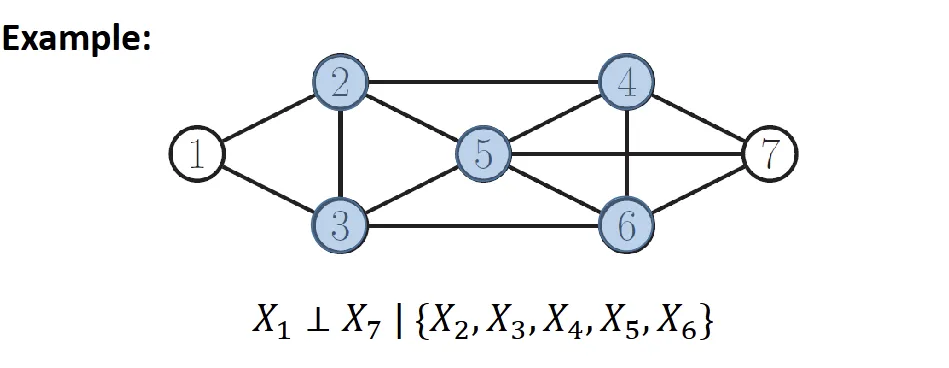
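In an undirected graph the Markov blanket of a node is simply its set of neighbours, so the local Markov statement can be read straight off an adjacency structure. The sketch below assumes an adjacency dict and a hypothetical 2x3 grid; the function names are illustrative.

```python
def markov_blanket(adj, s):
    """In an undirected graph, the Markov blanket of s is its set of neighbours."""
    return set(adj[s])

def local_markov_statement(adj, s):
    """Return (s, rest, blanket) encoding x_s ⊥ x_rest | x_blanket."""
    blanket = markov_blanket(adj, s)
    rest = set(adj) - blanket - {s}
    return s, rest, blanket

# Hypothetical 2x3 grid, nodes numbered row by row:
# 1 - 2 - 3
# |   |   |
# 4 - 5 - 6
adj = {
    1: {2, 4}, 2: {1, 3, 5}, 3: {2, 6},
    4: {1, 5}, 5: {2, 4, 6}, 6: {3, 5},
}

s, rest, blanket = local_markov_statement(adj, 2)
print(f"x_{s} is independent of {sorted(rest)} given {sorted(blanket)}")
```

For node 2 the blanket is {1, 3, 5}, and the remaining nodes {4, 6} are the ones it is conditionally independent of.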

Pairwise Markov Property

- $x_s \perp x_t \mid V \setminus \{x_s, x_t\}$

- $x_s$ and $x_t$ are conditionally independent given the rest if there is no direct edge between them

Parameterization of MRF

- if x and y are not directly linked ⇒ they are conditionally independent given the rest

  ⇒ must place x and y in different factors (local functions)

- $\psi_c(x_c)$: the local function of maximal clique c

- maximal clique: a clique (fully connected subset of nodes) that cannot be extended with additional nodes
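To see which factors a given graph calls for, the maximal cliques can be enumerated. The sketch below uses the basic Bron-Kerbosch recursion without pivoting, which is one standard choice and not something the notes prescribe; the example graph is hypothetical.

```python
def maximal_cliques(adj):
    """Enumerate maximal cliques with the basic Bron-Kerbosch recursion (no pivoting)."""
    cliques = []

    def expand(R, P, X):
        # R: current clique, P: candidates that extend it, X: already explored nodes.
        if not P and not X:
            cliques.append(frozenset(R))
            return
        for v in list(P):
            expand(R | {v}, P & adj[v], X & adj[v])
            P = P - {v}
            X = X | {v}

    expand(set(), set(adj), set())
    return cliques

# Hypothetical graph: triangle 1-2-3 plus a pendant edge 3-4.
adj = {1: {2, 3}, 2: {1, 3}, 3: {1, 2, 4}, 4: {3}}

cliques = maximal_cliques(adj)
print(sorted(sorted(c) for c in cliques))
```

Here nodes 1 and 4 share no edge, so they never appear in the same clique and therefore never in the same local function.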

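Hammersley-Clifford Theorem

- $p(y \mid \theta) = \frac{1}{Z(\theta)} \prod_c \psi_c(y_c \mid \theta_c)$ (product of the local functions of the maximal cliques)

- $Z(\theta)$: the normalization constant (partition function), constant with respect to y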
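For a tiny model the factorization can be evaluated by brute force. The sketch below assumes a hypothetical binary chain y1 - y2 - y3 whose maximal cliques are {y1, y2} and {y2, y3}, with made-up potential tables. It computes Z by summing over all states and then checks that y1 and y3 are conditionally independent given y2, as the missing edge requires.

```python
from itertools import product
from math import isclose

# Hypothetical potentials on the two maximal cliques of the chain y1 - y2 - y3.
psi_12 = {(0, 0): 3.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 2.0}
psi_23 = {(0, 0): 2.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 4.0}

def unnormalized(y):
    """Product of clique potentials for one full assignment y = (y1, y2, y3)."""
    y1, y2, y3 = y
    return psi_12[(y1, y2)] * psi_23[(y2, y3)]

states = list(product([0, 1], repeat=3))
Z = sum(unnormalized(y) for y in states)        # partition function
p = {y: unnormalized(y) / Z for y in states}    # normalized joint

def marginal(keep):
    """Marginalize p onto the index positions in `keep`."""
    out = {}
    for y, prob in p.items():
        key = tuple(y[i] for i in keep)
        out[key] = out.get(key, 0.0) + prob
    return out

p_2, p_12, p_23 = marginal([1]), marginal([0, 1]), marginal([1, 2])
# y1 ⊥ y3 | y2  <=>  p(y1,y2,y3) p(y2) == p(y1,y2) p(y2,y3)
pairwise_ok = all(
    isclose(p[(a, b, c)] * p_2[(b,)], p_12[(a, b)] * p_23[(b, c)])
    for a, b, c in states
)
print(Z, pairwise_ok)
```

Brute-force summation costs exponential time in the number of nodes, so this is only a check on small examples, not a practical way to compute Z.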

Log-Linear Potential Function (Maximum entropy)

- $\log p(y \mid \theta) = \sum_c \phi_c(y_c)^T \theta_c - \log Z(\theta)$ (can reduce the number of parameters)
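The log-linear form replaces each potential table with a feature vector and a weight vector. As an illustration only, the sketch below uses a hypothetical 3-node binary chain with a single "labels agree" feature per edge, so each clique needs one weight instead of a four-entry table. The feature, weights, and names are assumptions.

```python
from itertools import product
from math import exp, log, isclose

edges = [(0, 1), (1, 2)]                    # cliques of a hypothetical 3-node chain
theta = {(0, 1): [0.5], (1, 2): [-1.0]}     # one weight per clique

def phi(y_s, y_t):
    """Feature vector of an edge clique: a single agreement indicator."""
    return [1.0 if y_s == y_t else 0.0]

def score(y):
    """Sum over cliques of phi_c(y_c)^T theta_c."""
    return sum(
        sum(t * f for t, f in zip(theta[e], phi(y[e[0]], y[e[1]])))
        for e in edges
    )

states = list(product([0, 1], repeat=3))
log_Z = log(sum(exp(score(y)) for y in states))

def log_p(y):
    """log p(y | theta) = score(y) - log Z(theta)."""
    return score(y) - log_Z

total = sum(exp(log_p(y)) for y in states)
print(log_p((0, 0, 1)), total)
```

A positive weight rewards agreement along that edge and a negative weight penalizes it, and the probabilities recovered from `log_p` sum to one.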

Conditional Random Field (Discriminative random field)

- $p(y \mid x, w) = \frac{1}{Z(x, w)} \prod_c \psi_c(y_c \mid x, w)$

- $\psi_c(y_c \mid x, w) = \exp\left(w_c^T \phi(x, y_c)\right)$
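A small chain CRF makes the dependence of Z on the input x concrete. The sketch below is an assumption-laden toy: binary labels, edge cliques (t, t+1), a weight vector shared across cliques, and two hand-picked features. It computes p(y | x, w) by enumerating all labelings.

```python
from itertools import product
from math import exp, isclose

w = [1.5, 0.8]   # hypothetical weights, shared by all edge cliques

def phi(x, t, y_t, y_next):
    """Features of edge clique (t, t+1): input-label agreement and label smoothness."""
    sign_t = 1.0 if y_t == 1 else -1.0
    sign_next = 1.0 if y_next == 1 else -1.0
    emit = x[t] * sign_t + x[t + 1] * sign_next
    smooth = 1.0 if y_t == y_next else 0.0
    return [emit, smooth]

def psi(x, t, y_t, y_next):
    """Clique potential exp(w^T phi(x, y_c))."""
    return exp(sum(wi * fi for wi, fi in zip(w, phi(x, t, y_t, y_next))))

def crf_distribution(x):
    """Return {labeling: p(y | x, w)} by brute-force enumeration."""
    n = len(x)
    labelings = list(product([0, 1], repeat=n))

    def unnorm(y):
        out = 1.0
        for t in range(n - 1):
            out *= psi(x, t, y[t], y[t + 1])
        return out

    Z = sum(unnorm(y) for y in labelings)   # Z(x, w) is recomputed for each input x
    return {y: unnorm(y) / Z for y in labelings}

x = [2.0, 1.0, -3.0]
p = crf_distribution(x)
best = max(p, key=p.get)
print(best, p[best])
```

Unlike the MRF case, the normalizer is a function of the observed x, so only the conditional distribution over labels is modeled.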