[CS5242] Regularization

Regularization

- any modification to the learning algorithm that is intended to reduce generalization (validation) error but not training error

- reduce variance significantly without overly increasing bias

Parameter Norm Penalties

- add a penalty on the norm of the parameters to the loss function

- prevent overfitting

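- L1 norm regularization: $\Omega(w) = \lVert w \rVert_1 = \sum_i |w_i|$

- L2 norm regularization: $\Omega(w) = \frac{1}{2} \lVert w \rVert_2^2 = \frac{1}{2} \sum_i w_i^2$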
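
As a rough illustration of how a norm penalty enters the loss, here is a minimal NumPy sketch. The names `l1_penalty`, `l2_penalty`, `regularized_loss` and the strength `lam` are hypothetical and not from the course notes; the factor of 1/2 in the L2 term is a common convention, assumed here.

```python
import numpy as np

def l1_penalty(w, lam):
    """L1 penalty: lam * sum_i |w_i| (lam is an assumed strength hyperparameter)."""
    return lam * np.sum(np.abs(w))

def l2_penalty(w, lam):
    """L2 penalty: (lam / 2) * sum_i w_i^2 (the 1/2 factor is a convention)."""
    return 0.5 * lam * np.sum(w ** 2)

def regularized_loss(data_loss, w, lam, kind="l2"):
    """Total objective = data loss + parameter norm penalty."""
    penalty = l1_penalty(w, lam) if kind == "l1" else l2_penalty(w, lam)
    return data_loss + penalty

w = np.array([0.5, -1.0, 2.0])
print(regularized_loss(1.0, w, lam=0.1, kind="l1"))  # 1.0 + 0.1 * 3.5
print(regularized_loss(1.0, w, lam=0.1, kind="l2"))  # 1.0 + 0.05 * 5.25
```

A larger `lam` pulls the weights harder toward zero. The L1 form tends to produce sparse weights, while the L2 form shrinks all weights smoothly.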

Parameter sharing

- force sets of parameters to be equal

- only a subset of the parameters needs to be stored in memory

- e.g. CNN
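
To make the memory saving concrete, the sketch below counts parameters for a fully connected layer versus a convolutional layer that shares one small kernel across all spatial positions. The layer sizes and the helper names `dense_params` and `conv_params` are illustrative assumptions, not values from the notes.

```python
def dense_params(in_h, in_w, in_c, out_units):
    """Fully connected layer: one weight per (input pixel, output unit) pair, plus biases."""
    return in_h * in_w * in_c * out_units + out_units

def conv_params(k, in_c, out_c):
    """Conv layer: a single k x k kernel per (in, out) channel pair, reused at every position."""
    return k * k * in_c * out_c + out_c

# Hypothetical 32x32 RGB input mapped to 64 output units / channels.
print(dense_params(32, 32, 3, 64))  # 196672
print(conv_params(3, 3, 64))        # 1792
```

The convolutional count does not depend on the image size, because the same kernel weights are applied at every location.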

Dropout

- Training: randomly set some neurons to 0 with probability $p$

- can be different each iteration

- computationally cheap

- each hidden unit must perform well regardless of which other hidden units are present
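
The training-time behaviour can be sketched in a few lines of NumPy. This version uses "inverted" dropout, which rescales the surviving activations by 1 / (1 - p) so that nothing needs to change at test time; that rescaling is an assumption of this sketch and is not stated in the notes. The function name `dropout` and its arguments are hypothetical.

```python
import numpy as np

def dropout(h, p, rng, training=True):
    """Zero each activation independently with probability p (inverted dropout)."""
    if not training or p == 0.0:
        return h                          # at test time the layer is the identity
    mask = rng.random(h.shape) >= p       # True (keep) with probability 1 - p
    return h * mask / (1.0 - p)           # rescale so the expected activation is unchanged

rng = np.random.default_rng(0)
h = np.ones((4, 5))
out = dropout(h, p=0.5, rng=rng)          # a fresh mask is sampled on every call
print(out)
```

Because the mask is resampled on each call, every iteration trains a different thinned sub-network.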

Data augmentation

- synthesize data by transforming the original dataset

- incorporate invariances

- e.g
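
As one possible illustration for image data, the sketch below applies a random horizontal flip and a random shift (zero padding followed by a crop). These two transformations, the function name `augment`, and the `pad` parameter are assumptions chosen for the example; the notes do not say which transformations the course uses.

```python
import numpy as np

def augment(image, rng, pad=2):
    """Random horizontal flip, then a random crop of the zero-padded image."""
    if rng.random() < 0.5:
        image = image[:, ::-1]            # flip along the width axis
    h, w = image.shape[:2]
    # Pad only the two spatial axes; any trailing channel axis is left untouched.
    pad_width = ((pad, pad), (pad, pad)) + ((0, 0),) * (image.ndim - 2)
    padded = np.pad(image, pad_width)
    top = rng.integers(0, 2 * pad + 1)    # vertical offset in [0, 2 * pad]
    left = rng.integers(0, 2 * pad + 1)   # horizontal offset in [0, 2 * pad]
    return padded[top:top + h, left:left + w]

rng = np.random.default_rng(0)
image = np.arange(8 * 8 * 3, dtype=float).reshape(8, 8, 3)
augmented = augment(image, rng)
print(augmented.shape)
```

The label stays the same for every augmented copy, which is how the desired invariance (here, to flips and small translations) is taught to the model.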

Injecting noise

- add noise to simulate real-world situations

- Input: train with noise-injected data

- Hidden units: inject noise into the hidden layers

- Weights: inject noise into the weights to increase the stability of the learned function and parameters, e.g. RNN
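
A minimal sketch of noise injection, assuming zero-mean Gaussian noise (the notes do not specify the noise distribution). The same hypothetical helper `add_gaussian_noise` can be applied to an input batch, to hidden activations, or to a weight matrix during training.

```python
import numpy as np

def add_gaussian_noise(x, std, rng):
    """Return x plus zero-mean Gaussian noise with standard deviation std."""
    return x + rng.normal(0.0, std, size=x.shape)

rng = np.random.default_rng(0)
x = np.zeros((2, 3))                                  # stand-in for an input batch
noisy_x = add_gaussian_noise(x, std=0.1, rng=rng)     # input noise
W = np.ones((3, 4))                                   # stand-in for a weight matrix
noisy_W = add_gaussian_noise(W, std=0.01, rng=rng)    # weight noise
print(noisy_x.shape, noisy_W.shape)
```

Noise is normally applied only during training, with fresh noise drawn at each step.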

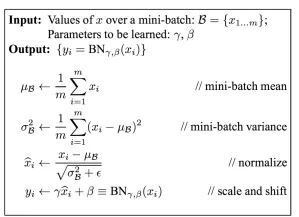

Batch normalization (BN)

- reduces internal covariate shift by normalizing the inputs of each layer

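- normalize → scale and shift: $\hat{x} = \frac{x - \mu}{\sigma}$, $y = \gamma \hat{x} + \beta$

- $\mu$: average, $\sigma$: standard deviation

- $\gamma$: scale, $\beta$: shift

- $\gamma$ and $\beta$ are parameters to learn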
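
The normalize, scale and shift steps can be sketched as a training-mode forward pass in NumPy. This is a simplified illustration: the small constant `eps` for numerical stability is an assumption, and the running statistics used at inference time are omitted. The function name `batch_norm` is hypothetical.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Batch norm forward pass over a (batch, features) array, training mode only."""
    mu = x.mean(axis=0)                       # per-feature average over the batch
    var = x.var(axis=0)                       # per-feature variance over the batch
    x_hat = (x - mu) / np.sqrt(var + eps)     # normalize
    return gamma * x_hat + beta               # scale and shift (learned parameters)

rng = np.random.default_rng(0)
x = rng.normal(5.0, 3.0, size=(8, 3))
y = batch_norm(x, gamma=np.ones(3), beta=np.zeros(3))
print(y.mean(axis=0), y.std(axis=0))          # close to 0 and 1 per feature
```

With `gamma` fixed at 1 and `beta` at 0 the output is simply standardized. Learning them lets the network recover a different scale and offset when that helps.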


https://itsjeremyhsieh.github.io/posts/cs5242-5-regulatization/